Creating Workflow blocks¶

Workflows blocks development requires an understanding of the Workflow Ecosystem. Before diving deeper into the details, let's summarize the required knowledge:

Understanding of Workflow execution, in particular:

-

what is the relation of Workflow blocks and steps in Workflow definition

-

how Workflow blocks and their manifests are used by Workflows Compiler

-

what is the

dimensionality levelof batch-oriented data passing through Workflow -

how Execution Engine interacts with step, regarding its inputs and outputs

-

what is the nature and role of Workflow

kinds -

understanding how

pydanticworks

Environment setup¶

As you will soon see, creating a Workflow block is simply a matter of defining a Python class that implements a specific interface. This design allows you to run the block using the Python interpreter, just like any other Python code. However, you may encounter difficulties when assembling all the required inputs, which would normally be provided by other blocks during Workflow execution. Therefore, it's important to set up the development environment properly for a smooth workflow. We recommend following these steps as part of the standard development process (initial steps can be skipped for subsequent contributions):

-

Set up the

condaenvironment and install main dependencies ofinference, as described ininferencecontributor guide. -

Familiarize yourself with the organization of the Workflows codebase.

Workflows codebase structure - cheatsheet

Below are the key packages and directories in the Workflows codebase, along with their descriptions:

-

inference/core/workflows- the main package for Workflows. -

inference/core/workflows/core_steps- contains Workflow blocks that are part of the Roboflow Core plugin. At the top levels, you'll find block categories, and as you go deeper, each block has its own package, with modules hosting different versions, starting fromv1.py -

inference/core/workflows/execution_engine- contains the Execution Engine. You generally won’t need to modify this package unless contributing to Execution Engine functionality. -

tests/workflows/- the root directory for Workflow tests -

tests/workflows/unit_tests/- suites of unit tests for the Workflows Execution Engine and core blocks. This is where you can test utility functions used in your blocks. -

tests/workflows/integration_tests/- suites of integration tests for the Workflows Execution Engine and core blocks. You can run end-to-end (E2E) tests of your workflows in combination with other blocks here.

-

-

Create a minimalistic block – You’ll learn how to do this in the following sections. Start by implementing a simple block manifest and basic logic to ensure the block runs as expected.

-

Add the block to the plugin – Once your block is created, add it to the list of blocks exported from the plugin. If you're adding the block to the Roboflow Core plugin, make sure to add an entry for your block in the loader.py. If you forget this step, your block won’t be visible!

-

Iterate and refine your block – Continue developing and running your block until you’re satisfied with the results. The sections below explain how to iterate on your block in various scenarios.

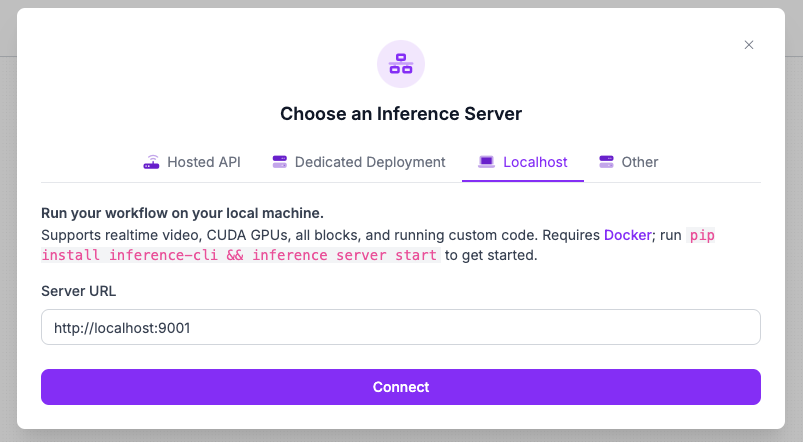

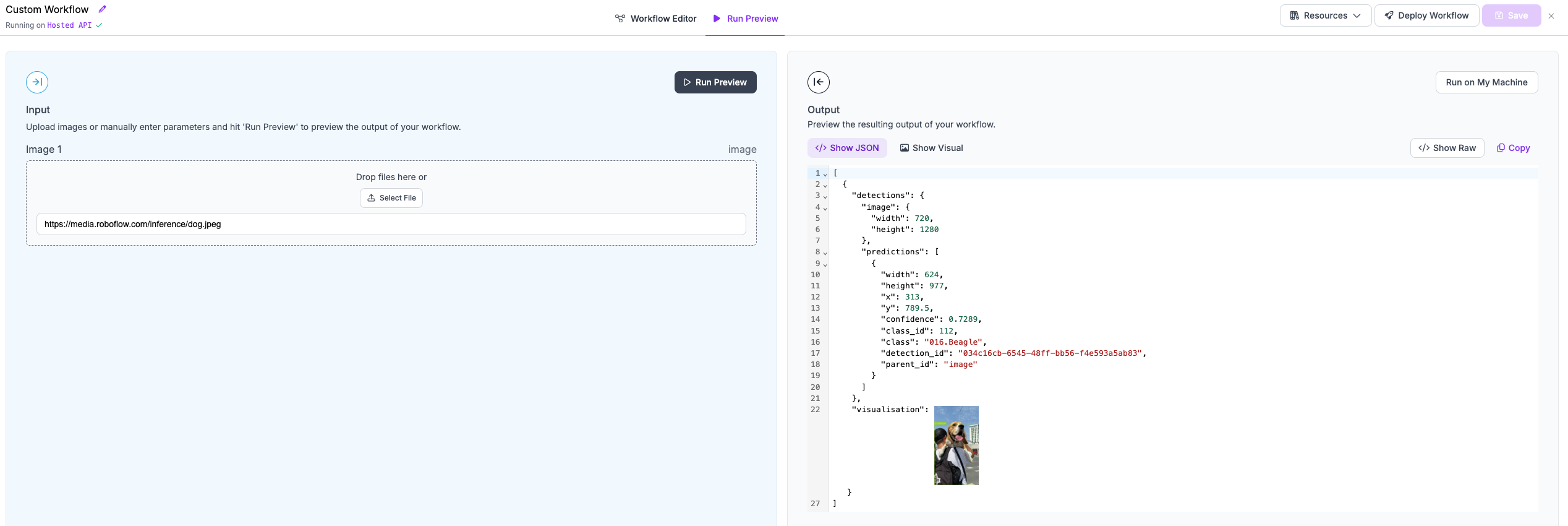

Running your blocks using Workflows UI¶

We recommend running the inference server with a mounted volume (which is much faster than re-building inference

server on each change):

inference_repo$ docker run -p 9001:9001 \

-v ./inference:/app/inference \

roboflow/roboflow-inference-server-cpu:latest

to quickly run previews:

My block requires extra dependencies - I cannot use pre-built inference server

It's natural that your blocks may sometimes require additional dependencies. To add a dependency, simply include it in one of the

requirements fileshat are installed in the relevant Docker image

(usually the

CPU build

of the inference server).

Afterward, run:

1 2 3 | |

You can then run your local build by specifying the test tag you just created:

1 2 3 | |

Running your blocks without Workflows UI¶

For contributors without access to the Roboflow platform, we recommend running the server as mentioned in the section above. However, instead of using the UI editor, you will need to create a simple Workflow definition and send a request to the server.

Running your Workflow without UI

The following code snippet demonstrates how to send a request to the inference server to run a Workflow.

The inference_sdk is included with the inference package as a lightweight client library for our server.

from inference_sdk import InferenceHTTPClient

YOUR_WORKFLOW_DEFINITION = ...

client = InferenceHTTPClient(

api_url=object_detection_service_url,

api_key="XXX", # optional, only required if Workflow uses Roboflow Platform

)

result = client.run_workflow(

specification=YOUR_WORKFLOW_DEFINITION,

images={

"image": your_image_np, # this is example input, adjust it

},

parameters={

"my_parameter": 37, # this is example input, adjust it

},

)

Recommended way for regular contributors¶

Creating integration tests in the tests/workflows/integration_tests/execution directory is a natural part of the

development iteration process. This approach allows you to develop and test simultaneously, providing valuable

feedback as you refine your code. Although it requires some experience, it significantly enhances

long-term code maintenance.

The process is straightforward:

-

Create a New Test Module: For example, name it

test_workflows_with_my_custom_block.py. -

Develop Example Workflows: Create one or more example Workflows. It would be best if your block cooperates with other blocks from the ecosystem.

-

Run Tests with Sample Data: Execute these Workflows in your tests using sample data (you can explore our fixtures to find example data we usually use).

-

Assert Expected Results: Validate that the results match your expectations.

By incorporating testing into your development flow, you ensure that your block remains stable over time and effectively interacts with existing blocks, enhancing the expressiveness of your work!

You can run your test using the following command:

pytest tests/workflows/integration_tests/execution/test_workflows_with_my_custom_block

Integration test template

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 | |

-

In line

2, you’ll find themodel_managerfixture, which is typically required by model blocks. This fixture provides theModelManagerabstraction frominference, used for loading and unloading models. -

Line

3defines a fixture that includes an image of two dogs (explore other fixtures to find more example images). -

Line

4is an optional fixture you may want to use if any of the blocks in your tested workflow require a Roboflow API key. If that’s the case, export theROBOFLOW_API_KEYenvironment variable with a valid key before running the test. -

Lines

7-11provide the setup for the initialization parameters of the blocks that the Execution Engine will create at runtime, based on your Workflow definition. -

Lines

19-23demonstrate how to run a Workflow by injecting input parameters. Please ensure that the keys in runtime_parameters match the inputs declared in your Workflow definition. -

Starting from line

26, you’ll find example assertions within the test.

Prototypes¶

To create a Workflow block you need some amount of imports from the core of Workflows library. Here is the list of imports that you may find useful while creating a block:

from inference.core.workflows.execution_engine.entities.base import (

Batch, # batches of data will come in Batch[X] containers

OutputDefinition, # class used to declare outputs in your manifest

WorkflowImageData, # internal representation of image

# - use whenever your input kind is image

)

from inference.core.workflows.prototypes.block import (

BlockResult, # type alias for result of `run(...)` method

WorkflowBlock, # base class for your block

WorkflowBlockManifest, # base class for block manifest

)

from inference.core.workflows.execution_engine.entities.types import *

# module with `kinds` from the core library

The most important are:

-

WorkflowBlock- base class for your block -

WorkflowBlockManifest- base class for block manifest

Understanding internal data representation

You may have noticed that we recommend importing the Batch and WorkflowImageData classes, which are

fundamental components used when constructing building blocks in our system. For a deeper understanding of

how these classes fit into the overall architecture, we encourage you to refer to the

Data Representations page for more detailed information.

Block manifest¶

A manifest is a crucial component of a Workflow block that defines a prototype for step declaration that can be placed in a Workflow definition to use the block. In particular, it:

-

Uses

pydanticto power syntax parsing of Workflows definitions: It inherits frompydantic BaseModelfeatures to parse and validate Workflow definitions. This schema can also be automatically exported to a format compatible with the Workflows UI, thanks topydantic'sintegration with the OpenAPI standard. -

Defines Data Bindings: It specifies which fields in the manifest are selectors for data flowing through the workflow during execution and indicates their kinds.

-

Describes Block Outputs: It outlines the outputs that the block will produce.

-

Specifies Dimensionality: It details the properties related to input and output dimensionality.

-

Indicates Batch Inputs and Empty Values: It informs the Execution Engine whether the step accepts batch inputs and empty values.

-

Ensures Compatibility: It dictates the compatibility with different Execution Engine versions to maintain stability. For more details, see versioning.

Scaffolding for manifest¶

To understand how manifests work, let's define one step-by-step. The example block that we build here will be calculating images similarity. We start from imports and class scaffolding:

from typing import Literal

from inference.core.workflows.prototypes.block import (

WorkflowBlockManifest,

)

class ImagesSimilarityManifest(WorkflowBlockManifest):

type: Literal["my_plugin/images_similarity@v1"]

name: str

This is the minimal representation of a manifest. It defines two special fields that are important for Compiler and Execution engine:

-

type- required to parse syntax of Workflows definitions based on dynamic pool of blocks - this is thepydantictype discriminator that lets the Compiler understand which block manifest is to be verified when parsing specific steps in a Workflow definition -

name- this property will be used to give the step a unique name and let other steps selects it via selectors

Adding inputs¶

We want our step to take two inputs with images to be compared.

Adding inputs

Let's see how to add definitions of those inputs to manifest:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 | |

-

in the lines

2-9, we've added a couple of imports to ensure that we have everything needed -

line

20definesimage_1parameter - as manifest is prototype for Workflow Definition, the only way to tell about image to be used by step is to provide selector - we have a specialised type in core library that can be used -Selector. If you look deeper into codebase, you will discover this is type alias constructor function - tellingpydanticto expect string matching$inputs.{name}and$steps.{name}.*patterns respectively, additionally providing extra schema field metadata that tells Workflows ecosystem components that thekindof data behind selector is image. important note: we denote kind as list - the list of specific kinds is interpreted as union of kinds by Execution Engine. -

denoting

pydanticField(...)attribute in the last parts of line20is optional, yet appreciated, especially for blocks intended to cooperate with Workflows UI -

starting in line

23, you can find definition ofimage_2parameter which is very similar toimage_1.

Such definition of manifest can handle the following step declaration in Workflow definition:

{

"type": "my_plugin/images_similarity@v1",

"name": "my_step",

"image_1": "$inputs.my_image",

"image_2": "$steps.image_transformation.image"

}

This definition will make the Compiler and Execution Engine:

-

initialize the step from Workflow block declaring type

my_plugin/images_similarity@v1 -

supply two parameters for the steps run method:

-

input_1of typeWorkflowImageDatawhich will be filled with image submitted as Workflow execution input namedmy_image. -

imput_2of typeWorkflowImageDatawhich will be generated at runtime, by another step calledimage_transformation

Adding parameters to the manifest¶

Let's now add the parameter that will influence step execution.

Adding parameter to the manifest

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 | |

-

line

9importsfloat_zero_to_onekinddefinition which will be used to define the parameter. -

in line

27we start defining parameter calledsimilarity_threshold. Manifest will accept either float values or selector to workflow input ofkindfloat_zero_to_one, imported in line9.

Such definition of manifest can handle the following step declaration in Workflow definition:

1 2 3 4 5 6 7 | |

or alternatively:

1 2 3 4 5 6 7 | |

Declaring block outputs¶

We have successfully defined inputs for our block, but we are still missing couple of elements required to successfully run blocks. Let's define block outputs.

Declaring block outputs

Minimal set of information required is outputs description. Additionally, to increase block stability, we advise to provide information about execution engine compatibility.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 | |

-

line

5imports class that is used to describe step outputs -

line

11importsbooleankindto be used in outputs definitions -

lines

32-39declare class method to specify outputs from the block - each entry in list declare one return property for each batch element and itskind. Our block will return boolean flagimages_matchfor each pair of images. -

lines

41-43declare compatibility of the block with Execution Engine - see versioning page for more details

As a result of those changes:

-

Execution Engine would understand that steps created based on this block are supposed to deliver specified outputs and other steps can refer to those outputs in their inputs

-

the blocks loading mechanism will not load the block given that Execution Engine is not in version

v1

LEARN MORE: Dynamic outputs

Some blocks may not be able to arbitrailry define their outputs using classmethod - regardless of the content of step manifest that is available after parsing. To support this we introduced the following convention:

-

classmethod

describe_outputs(...)shall return list with one element of name*and kind*(akaWILDCARD_KIND) -

additionally, block manifest should implement instance method

get_actual_outputs(...)that provides list of actual outputs that can be generated based on filled manifest data

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 | |

Definition of block class¶

At this stage, the manifest of our simple block is ready, we will continue with our example. You can check out the advanced topics section for more details that would just be a distractions now.

Base implementation¶

Having the manifest ready, we can prepare baseline implementation of the block.

Block scaffolding

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 | |

-

lines

1,5-6and8-11added changes into import surtucture to provide additional symbols required to properly define block class and all of its methods signatures -

lines

53-55defines class methodget_manifest(...)to simply return the manifest class we cretaed earlier -

lines

57-63definerun(...)function, which Execution Engine will invoke with data to get desired results. Please note that manifest fields defining inputs of image kind are marked asWorkflowImageData- which is compliant with intenal data representation ofimagekind described in kind documentation.

Providing implementation for block logic¶

Let's now add an example implementation of the run(...) method to our block, such that

it can produce meaningful results.

Note

The Content of this section is supposed to provide examples on how to interact with the Workflow ecosystem as block creator, rather than providing robust implementation of the block.

Implementation of run(...) method

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 | |

-

in line

3we import OpenCV -

lines

55-57defines block constructor, thanks to this - state of block is initialised once and live through consecutive invocation ofrun(...)method - for instance when Execution Engine runs on consecutive frames of video -

lines

69-80provide implementation of block functionality - the details are trully not important regarding Workflows ecosystem, but there are few details you should focus:-

lines

69and70make use ofWorkflowImageDataabstraction, showcasing hownumpy_imageproperty can be used to getnp.ndarrayfrom internal representation of images in Workflows. We advise to expole remaining properties ofWorkflowImageDatato discover more. -

result of workflow block execution, declared in lines

78-80is in our case just a dictionary with the keys being the names of outputs declared in manifest, in line43. Be sure to provide all declared outputs - otherwise Execution Engine will raise error.

-

Exposing block in plugin¶

Now, your block is ready to be used, but Execution Engine is not aware of its existence. This is because no registered

plugin exports the block you just created. Details of blocks bundling are be covered in separate page,

but the remaining thing to do is to add block class into list returned from your plugins' load_blocks(...) function:

# __init__.py of your plugin (or roboflow_core plugin if you contribute directly to `inference`)

from my_plugin.images_similarity.v1 import ImagesSimilarityBlock

# this is example import! requires adjustment

def load_blocks():

return [ImagesSimilarityBlock]

Advanced topics¶

Blocks processing batches of inputs¶

Sometimes, performance of your block may benefit if all input data is processed at once as batch. This may happen for models running on GPU. Such mode of operation is supported for Workflows blocks - here is the example on how to use it for your block.

Implementation of blocks accepting batches

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 | |

-

line

13importsBatchfrom core of workflows library - this class represent container which is veri similar to list (but read-only) to keep batch elements -

lines

40-42define class method that changes default behaviour of the block and make it capable to process batches - we are marking each parameter that therun(...)method recognizes as batch-oriented. -

changes introduced above made the signature of

run(...)method to change, nowimage_1andimage_2are not instances ofWorkflowImageData, but rather batches of elements of this type. Important note: having multiple batch-oriented parameters we expect that those batches would have the elements related to each other at corresponding positions - such that our block comparingimage_1[1]intoimage_2[1]actually performs logically meaningful operation. -

lines

74-77,85-86present changes that needed to be introduced to run processing across all batch elements - showcasing how to iterate over batch elements if needed -

it is important to note how outputs are constructed in line

85- each element of batch will be given its entry in the list which is returned fromrun(...)method. Order must be aligned with order of batch elements. Each output dictionary must provide all keys declared in block outputs.

Inputs that accept both batches and scalars

It is relatively unlikely, but may happen that your block would need to accept both batch-oriented data

and scalars within a single input parameter. Execution Engine recognises that using

get_parameters_accepting_batches_and_scalars(...) method of block manifest. Take a look at the

example provided below:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 | |

-

lines

20-22specify manifest parameters that are expected to accept mixed (both scalar and batch-oriented) input data - point out that at this stage there is no difference in definition compared to previous examples. -

lines

24-26specifyget_parameters_accepting_batches_and_scalars(...)method to tell the Execution Engine that blockrun(...)method can handle both scalar and batch-oriented inputs for the specified parameters. -

lines

45-47depict the parameters of mixed nature inrun(...)method signature. -

line

49reveals that we must keep track of the expected output size within the block logic. That's why it is quite tricky to implement blocks with mixed inputs. Normally, when blockrun(...)method operates on scalars - in majority of cases (exceptions will be described below) - the metod constructs single output dictionary. Similairly, when batch-oriented inputs are accepted - those inputs define expected output size. In this case, however, we must manually detect batches and catch their sizes. -

lines

50-54showcase how we usually deal with mixed parameters - applying different logic when batch-oriented data is detected -

as mentioned earlier, output construction must also be adjusted to the nature of mixed inputs - which is illustrated in lines

65-70

Implementation of flow-control block¶

Flow-control blocks differs quite substantially from other blocks that just process the data. Here we will show how to create a flow control block, but first - a little bit of theory:

-

flow-control block is the block that declares compatibility with step selectors in their manifest (selector to step is defined as

$steps.{step_name}- similar to step output selector, but without specification of output name) -

flow-control blocks cannot register outputs, they are meant to return

FlowControlobjects -

FlowControlobject specify next steps (from selectors provided in step manifest) that for given batch element (SIMD flow-control) or whole workflow execution (non-SIMD flow-control) should pick up next

Implementation of flow-control

Example provides and comments out implementation of random continue block

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 | |

-

line

10imports type annotation for step selector which will be used to notify Execution Engine that the block controls the flow -

line

14importsFlowControlclass which is the only viable response from flow-control block -

line

28defines list of step selectors which effectively turns the block into flow-control one -

lines

55and56show how to construct output -FlowControlobject accept context beingNone,stringorlist of strings-Nonerepresent flow termination for the batch element, strings are expected to be selectors for next steps, passed in input.

Implementation of flow-control - batch variant

Example provides and comments out implementation of random continue block

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 | |

-

line

11imports type annotation for step selector which will be used to notify Execution Engine that the block controls the flow -

line

15importsFlowControlclass which is the only viable response from flow-control block -

lines

29-32defines list of step selectors which effectively turns the block into flow-control one -

lines

38-40contain definition ofget_parameters_accepting_batches(...)method telling Execution Engine that blockrun(...)method expects batch-orientedimageparameter. -

line

59revels that we need to return flow-control guide for each and every element ofimagebatch. -

to achieve that end, in line

60we iterate over the contntent of batch. -

lines

61-63show how to construct output -FlowControlobject accept context beingNone,stringorlist of strings-Nonerepresent flow termination for the batch element, strings are expected to be selectors for next steps, passed in input.

Nested selectors¶

Some block will require list of selectors or dictionary of selectors to be

provided in block manifest field. Version v1 of Execution Engine supports only

one level of nesting - so list of lists of selectors or dictionary with list of selectors

will not be recognised properly.

Practical use cases showcasing usage of nested selectors are presented below.

Fusion of predictions from variable number of models¶

Let's assume that you want to build a block to get majority vote on multiple classifiers predictions - then you would like your run method to look like that:

# pseud-code here

def run(self, predictions: List[dict]) -> BlockResult:

predicted_classes = [p["class"] for p in predictions]

counts = Counter(predicted_classes)

return {"top_class": counts.most_common(1)[0]}

Nested selectors - models ensemble

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 | |

-

lines

23-26depict how to define manifest field capable of accepting list of selectors -

line

50shows what to expect as input to block'srun(...)method - list of objects which are representation of specific kind. If the block accepted batches, the input type ofpredictionsfield would beList[Batch[sv.Detections]

Such block is compatible with the following step declaration:

1 2 3 4 5 6 7 8 | |

Block with data transformations allowing dynamic parameters¶

Occasionally, blocks may need to accept group of "named" selectors, which names and values are to be defined by creator of Workflow definition. In such cases, block manifest shall accept dictionary of selectors, where keys serve as names for those selectors.

Nested selectors - named selectors

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 | |

-

lines

22-25depict how to define manifest field capable of accepting dictionary of selectors - providing mapping between selector name and value -

line

46shows what to expect as input to block'srun(...)method - dict of objects which are reffered with selectors. If the block accepted batches, the input type ofdatafield would beDict[str, Union[Batch[Any], Any]]. In non-batch cases, non-batch-oriented data referenced by selector is automatically broadcasted, whereas for blocks accepting batches -Batchcontainer wraps only batch-oriented inputs, with other inputs being passed as singular values.

Such block is compatible with the following step declaration:

1 2 3 4 5 6 7 8 | |

Practical implications will be the following:

-

under

data["a"]insiderun(...)you will be able to find model's predictions - likesv.Detectionsifmodel_1is object-detection model -

under

data["b"]insiderun(...), you will find value of input parameter namedmy_parameter

Inputs and output dimensionality vs run(...) method¶

The dimensionality of block inputs plays a crucial role in shaping the run(...) method’s signature, and that's

why the system enforces strict bounds on the differences in dimensionality levels between inputs

(with the maximum allowed difference being 1). This restriction is critical for ensuring consistency and

predictability when writing blocks.

If dimensionality differences weren't controlled, it would be difficult to predict the structure of

the run(...) method, making development harder and less reliable. That’s why validation of this property

is strictly enforced during the Workflow compilation process.

Similarly, the output dimensionality also affects the method signature and the format of the expected output. The ecosystem supports the following scenarios:

-

all inputs have the same dimensionality and outputs does not change dimensionality - baseline case

-

all inputs have the same dimensionality and output decreases dimensionality

-

all inputs have the same dimensionality and output increases dimensionality

-

inputs have different dimensionality and output is allowed to keep the dimensionality of reference input

Other combinations of input/output dimensionalities are not allowed to ensure consistency and to prevent ambiguity in the method signatures.

Impact of dimensionality on run(...) method - batches disabled

In this example, we perform dynamic crop of image based on predictions.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 | |

-

in lines

28-30manifest class declares output dimensionality offset - value1should be understood as adding1to dimensionality level -

point out, that in line

63, block eliminates empty images from further processing but placingNoneinstead of dictionatry with outputs. This would utilise the same Execution Engine behaviour that is used for conditional execution - datapoint will be eliminated from downstream processing (unless steps requesting empty inputs are present down the line). -

in lines

64-65results for single inputimageandpredictionsare collected - it is meant to be list of dictionares containing all registered outputs as keys. Execution engine will understand that the step returns batch of elements for each input element and create nested sturcures of indices to keep track of during execution of downstream steps.

In this example, the block visualises crops predictions and creates tiles presenting all crops predictions in single output image.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 | |

-

in lines

30-32manifest class declares output dimensionality offset - value-1should be understood as decreasing dimensionality level by1 -

in lines

34-36manifest class declaresrun(...)method inputs that will be subject to auto-batch casting ensuring that the signature is always stable. Auto-batch casting was introduced in Execution Enginev0.1.6.0 -

refer to changelog for more details.

-

in lines

53-55you can see the impact of output dimensionality decrease on the method signature. First two inputs (declared in line36) are artificially wrapped inBatch[]container, whereasscalar_parameterremains primitive type. This is done by Execution Engine automatically on output dimensionality decrease when all inputs have the same dimensionality to enable access to all elements occupying the last dimensionality level. Obviously, only elements related to the same element from top-level batch will be grouped. For instance, if you had two input images that you cropped - crops from those two different images will be grouped separately. -

lines

65-66illustrate how output is constructed - single value is returned and that value will be indexed by Execution Engine in output batch with reduced dimensionality

In this example, block merges detections which were predicted based on crops of original image - result is to provide single detections with all partial ones being merged.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 | |

-

in lines

31-36manifest class declares input dimensionalities offset, indicatingimageparameter being top-level andimage_predictionsbeing nested batch of predictions -

whenever different input dimensionalities are declared, dimensionality reference property must be pointed (see lines

38-40) - this dimensionality level would be used to calculate output dimensionality - in this particular case, we specifyimage. This choice has an implication in the expected format of result - in the chosen scenario we are supposed to return single dictionary with all registered outputs keys. If our choice isimage_predictions, we would return list of dictionaries (of size equal to length ofimage_predictionsbatch). In other worlds,get_dimensionality_reference_property(...)which dimensionality level should be associated to the output. -

lines

63-64present impact of dimensionality offsets specified in lines31-36. It is clearly visible thatimage_predictionsis a nested batch regardingimage. Obviously, only nested predictions relevant for the specificimagesare grouped in batch and provided to the method in runtime. -

as mentioned earlier, line

69construct output being single dictionary, as we register output at dimensionality level ofimage(which was also shipped as single element)

Impact of dimensionality on run(...) method - batches enabled

In this example, we perform dynamic crop of image based on predictions.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 | |

-

in lines

29-31manifest declares that block accepts batches of inputs -

in lines

33-35manifest class declares output dimensionality offset - value1should be understood as adding1to dimensionality level -

in lines

55-66, signature of input parameters reflects that therun(...)method runs against inputs of the same dimensionality and those inputs are provided in batches -

point out, that in line

70, block eliminates empty images from further processing but placingNoneinstead of dictionatry with outputs. This would utilise the same Execution Engine behaviour that is used for conditional execution - datapoint will be eliminated from downstream processing (unless steps requesting empty inputs are present down the line). -

construction of the output, presented in lines

71-73indicates two levels of nesting. First of all, block operates on batches, so it is expected to return list of outputs, one output for each input batch element. Additionally, this output element for each input batch element turns out to be nested batch - hence for each input iage and prediction, block generates list of outputs - elements of that list are dictionaries providing values for each declared output.

In this example, the block visualises crops predictions and creates tiles presenting all crops predictions in single output image.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 | |

-

lines

29-31manifest that block is expected to take batches as input -

in lines

33-35manifest class declares output dimensionality offset - value-1should be understood as decreasing dimensionality level by1 -

in lines

52-53you can see the impact of output dimensionality decrease and batch processing on the method signature. First "layer" ofBatch[]is a side effect of the fact that manifest declared that block accepts batches of inputs. The second "layer" comes from output dimensionality decrease. Execution Engine wrapps up the dimension to be reduced into additionalBatch[]container porvided in inputs, such that programmer is able to collect all nested batches elements that belong to specific top-level batch element. -

lines

66-67illustrate how output is constructed - for each top-level batch element, block aggregates all crops and predictions and creates a single tile. As block accepts batches of inputs, this procedure end up with one tile for each top-level batch element - hence list of dictionaries is expected to be returned.

In this example, block merges detections which were predicted based on crops of original image - result is to provide single detections with all partial ones being merged.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 | |

-

lines

31-33manifest that block is expected to take batches as input -

in lines

35-40manifest class declares input dimensionalities offset, indicatingimageparameter being top-level andimage_predictionsbeing nested batch of predictions -

whenever different input dimensionalities are declared, dimensionality reference property must be pointed (see lines

42-44) - this dimensionality level would be used to calculate output dimensionality - in this particular case, we specifyimage. This choice has an implication in the expected format of result - in the chosen scenario we are supposed to return single dictionary for each element ofimagebatch. If our choice isimage_predictions, we would return list of dictionaries (of size equal to length of nestedimage_predictionsbatch) for each inputimagebatch element. -

lines

66-67present impact of dimensionality offsets specified in lines35-40as well as the declararion of batch processing from lines32-34. First "layer" ofBatch[]container comes from the latter, nestedBatch[Batch[]]forimages_predictionscomes from the definition of input dimensionality offset. It is clearly visible thatimage_predictionsholds batch of predictions relevant for specific elements ofimagebatch. -

as mentioned earlier, lines

76-77construct output being single dictionary for each element ofimagebatch

Block accepting empty inputs¶

As discussed earlier, some batch elements may become "empty" during the execution of a Workflow. This can happen due to several factors:

-

Flow-control mechanisms: Certain branches of execution can mask specific batch elements, preventing them from being processed in subsequent steps.

-

In data-processing blocks: In some cases, a block may not be able to produce a meaningful output for a specific data point. For example, a Dynamic Crop block cannot generate a cropped image if the bounding box size is zero.

Some blocks are designed to handle these empty inputs, such as block that can replace missing outputs with default values. This block can be particularly useful when constructing structured outputs in a Workflow, ensuring that even if some elements are empty, the output lacks missing elements making it harder to parse.

Block accepting empty inputs

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 | |

-

in lines

20-22you may find declaration stating that block acccepts empt inputs -

a consequence of lines

20-22is visible in line41, when signature states that inputBatchmay contain empty elements that needs to be handled. In fact - the block generates "artificial" output substituting empty value, which makes it possible for those outputs to be "visible" for blocks not accepting empty inputs that refer to the output of this block. You should assume that each input that is substituted by Execution Engine with data generated in runtime may provide optional elements.

Block with custom constructor parameters¶

Some blocks may require objects constructed by outside world to work. In such scenario, Workflows Execution Engine job is to transfer those entities to the block, making it possible to be used. The mechanism is described in the page presenting Workflows Compiler, as this is the component responsible for dynamic construction of steps from blocks classes.

Constructor parameters must be:

-

requested by block - using class method

WorkflowBlock.get_init_parameters(...) -

provided in the environment running Workflows Execution Engine:

-

directly, as shown in this example

-

using defaults registered for Workflow plugin

-

Let's see how to request init parameters while defining block.

Block requesting constructor parameters

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 | |

-

lines

30-31declare class constructor which is not parameter-free -

to inform Execution Engine that block requires custom initialisation,

get_init_parameters(...)method in lines33-35enlists names of all parameters that must be provided