Inference handles the core tasks of computer vision applications

Integrate cutting-edge models, efficiently process video

streams, optimize CPU/GPU resources, and manage dependencies.

Workflows

Build complex inference pipelines with a visual workflow editor

Model Chaining

Combine multiple models in one pipeline.

Access Foundation Models

Leverage state-of-the-art models like Florence-2, CLIP,

and SAM2 for diverse applications.

Extend with Custom Code

Customize workflows and features by integrating your own

code and models.

100+ pre-built blocks

Open source models, LLMs, core logic, and external

applications.

Capabilities

Deploy using a fully managed infrastructure with an API endpoint

or on-device, internet connection optional, without the headache

of environment management

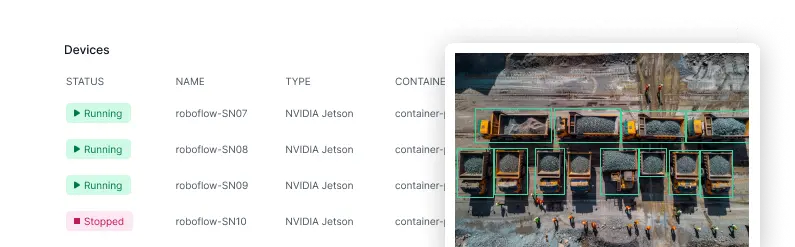

Deploy Anywhere

Run fine-tuned models in the cloud or on your hardware

for full control and data security.

Integrate APIs

Seamlessly connect with external systems to automate and

enhance your workflows.

Managed Compute

Deploy models seamlessly with managed infrastructure.

Manage Streams

Easily configure and monitor multiple devices in one

interface.

Applications

Build real-world applications with tracking, notifications,

automations, and pre-built logic.

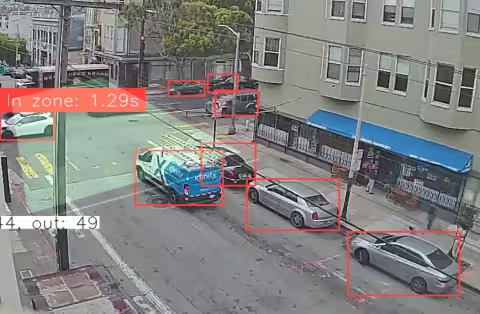

Track and Visualize

Monitor object movements, generate visual reports, and

analyze real-time data across streams.

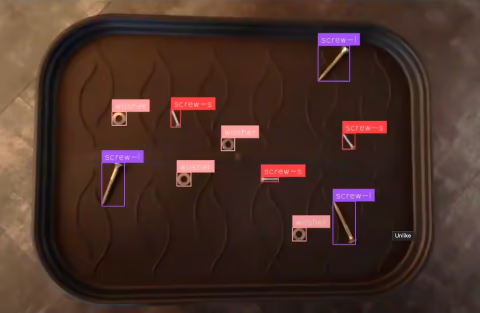

Combine ML & CV

Integrate machine learning with computer vision

techniques like template matching and barcode reading.

Event Notifications

Get real-time alerts when specific events occur.

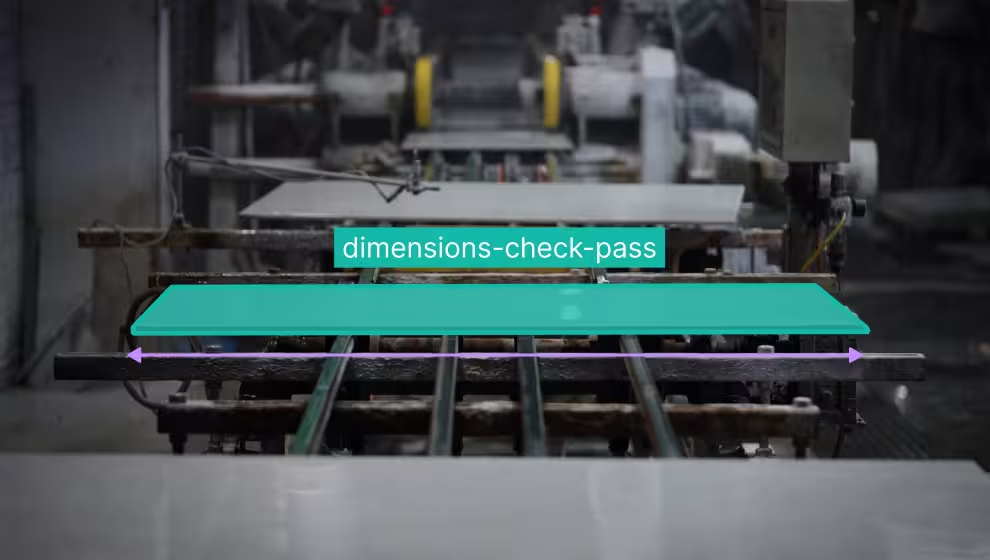

Measure Objects

Accurately calculate dimensions, distances, and object

counts for precision tasks.

Workflow Examples

See how developers are building workflows with model

chaining pipelines, visual language models, OCR, and

custom business logic.

Video Tutorials

Follow step-by-step video tutorials to build real-world

applications.

Start Building with Inference

Everything you need to start running models, exploring

capabilities, and building intelligent workflows.